The AI-hardware market used to be a one-horse race. In 2025 it’s a sprint of specialists.

That variety is good news, if you can navigate it. As an Australian integrator with 25 years of build-to-order experience and direct partnerships with NVIDIA, AMD and Intel, DiGiCOR has a simple mandate: map every customer’s workflow, facility and budget to the hardware that delivers the fastest payback, with no vendor strings attached.

| Year | Milestone | Lesson for 2025 |
|---|---|---|
| 1999 | GeForce 256 kicks off the “graphics vs CPU” arms race. | Faster silicon often starts in gaming. |
| 2006 | NVIDIA unveils CUDA. | Software ecosystems decide long-term winners. |
| 2012 | AlexNet proves GPUs crush deep learning. | Academic breakthroughs drive enterprise demand. |
| 2020 | A100 & TPU v4 turn AI capacity into an API. | Cap-ex vs op-ex becomes a daily trade-off. |
| 2024 | Gaudi 3 (Ethernet on die) & MI300A/X (CPU-GPU fusion) launch. | “One vendor fits all” is officially over. |
| 2025 | H200 (141 GB HBM3e) & WSE-3 wafer arrive. | Memory capacity, not raw TOPS, is the new bottleneck. |
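The “memory capacity” lesson comes down to simple arithmetic: weight footprint ≈ parameter count × bytes per parameter. The sketch below is a rough sizing aid, not a DiGiCOR tool. The helper names, the precision table and the 20% headroom reserved for KV cache and runtime overhead are illustrative assumptions; the per-device memory sizes match the vendor capacities quoted in the accelerator comparison.

```python
# Rough sizing sketch (hypothetical helpers, not a vendor tool).
# Assumption: model weights dominate memory; KV cache, activations and runtime
# overhead are lumped into a single "headroom" fraction reserved on the device.

BYTES_PER_PARAM = {"fp16": 2.0, "fp8": 1.0, "int4": 0.5}

# On-device memory in GB, as quoted by the vendors.
DEVICE_MEM_GB = {"H100 PCIe": 80, "H200": 141, "Gaudi 3": 128, "MI300X": 192}


def weights_gb(params_billion: float, precision: str) -> float:
    """Weight footprint in GB (1 GB = 1e9 bytes)."""
    return params_billion * BYTES_PER_PARAM[precision]


def fits(params_billion: float, precision: str, mem_gb: float,
         headroom: float = 0.2) -> bool:
    """True if the weights fit in the memory left after reserving `headroom`."""
    return weights_gb(params_billion, precision) <= mem_gb * (1 - headroom)


if __name__ == "__main__":
    for params, precision in [(70, "fp16"), (70, "fp8"), (175, "int4")]:
        need = weights_gb(params, precision)
        devices = [name for name, mem in DEVICE_MEM_GB.items()
                   if fits(params, precision, mem)]
        print(f"{params}B @ {precision}: {need:.1f} GB of weights; "
              f"single-device fit: {devices or 'none'}")
```

Under these assumptions a 70 B model at FP8 (about 70 GB) clears the H200’s 141 GB but not an 80 GB H100, which is what “memory capacity is the new bottleneck” means in practice.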

| Accelerator | Memory / BW | Peak Math* | Power | Where It Pays Off (ROI Lens) |

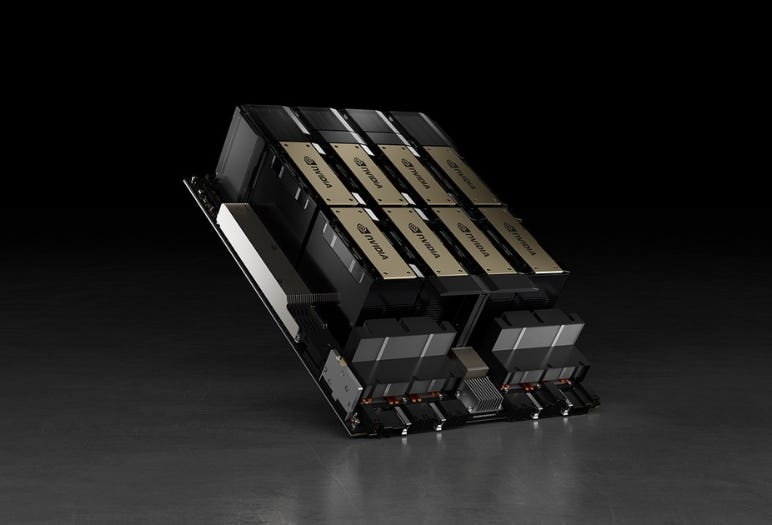

| NVIDIA H100 (PCIe) | 80 GB HBM2e • 2 TB/s | 3.9 PF | 350 W | Fastest “time-to-first-demo” thanks to mature CUDA & MIG. |

| NVIDIA H200 (SXM/NVL) | 141 GB HBM3e • 4.8 TB/s | 3.9 PF | 600–700 W | One-GPU inference for 70-175 B models—cuts latency hardware in half. |

| AMD MI300 X | 192 GB HBM3 • 5.3 TB/s | 5.2 PF | 750 W | Best $/token for bandwidth-bound HPC-plus-AI workloads. |

| AMD MI300 A (APU) | 128 GB HBM3 • 5.3 TB/s | 5.3 PF | 600 W | CPU & GPU share HBM—obliterates PCIe copy overhead. |

| Intel Gaudi 3 | 128 GB HBM2e • 3.7 TB/s | 1.8 PF | 600 W | Lowest cap-ex per token; 24× 200 GbE removes pricey IB fabrics. |

| Google TPU v5e (cloud) | 16 GB HBM • 0.8 TB/s | pod: 100 PetaOPS INT8 | OPEX only | Perfect for burst training or pilot projects without on-prem power budget. |

*Vendor peak FP8/FP16 (with sparsity where quoted).
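Why bandwidth matters as much as capacity: when a dense model generates one token at a time for a single request, every weight is read from memory once per token, so memory bandwidth sets a ceiling on tokens per second. The sketch below applies that rule of thumb to the bandwidth figures in the table. The 70 B FP8 model (about 70 GB of weights) is a hypothetical example, and the estimate ignores KV-cache traffic and compute limits, so measured numbers will be lower.

```python
# Per-stream decode ceiling for a memory-bandwidth-bound, dense model.
# Assumption: each generated token streams every weight from HBM exactly once;
# KV-cache reads, kernel efficiency and compute limits are ignored.

# Peak memory bandwidth in TB/s, as quoted in the accelerator table.
BANDWIDTH_TB_S = {
    "H100 PCIe": 2.0,
    "Gaudi 3": 3.7,
    "H200": 4.8,
    "MI300X": 5.3,
}


def decode_ceiling_tokens_per_s(bandwidth_tb_s: float, weights_gb: float) -> float:
    """Upper bound on single-request tokens/s: bytes per second / bytes per token."""
    return bandwidth_tb_s * 1000 / weights_gb  # TB/s -> GB/s, then divide by GB


if __name__ == "__main__":
    weights = 70.0  # hypothetical 70 B-parameter model stored at FP8 (1 byte/param)
    for name, bw in BANDWIDTH_TB_S.items():
        ceiling = decode_ceiling_tokens_per_s(bw, weights)
        print(f"{name}: <= {ceiling:.0f} tokens/s per stream")
```

Batching many requests amortises each weight read across several tokens, which is why aggregate throughput can exceed this per-stream figure; the ceiling is still a quick way to see why the high-bandwidth parts lead on latency-sensitive serving.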

| Stack | Ready Libraries | Serving Layer | Multi-Tenant Control | Maturity Score* |
|---|---|---|---|---|
| CUDA 12 | TensorRT-LLM, NeMo, DeepStream | Triton | MIG / vGPU | ★★★★★ |
| ROCm 6 | MIGraphX, Shark | Triton-ROCm | SR-IOV | ★★★★☆ |
| SynapseAI 2.3 | Optimum-Habana | TGIS | Multi-Instance | ★★★☆☆ |
| XLA / PaxML | JAX, T5X | Cloud-managed | Project caps | ★★★★☆ |
| Poplar 3.4 | PopVision | PopRT | IPU partitioning | ★★☆☆☆ |
| CSoft | Model Zoo | Cerebras-Inference | Model slices | ★★☆☆☆ |

*DiGiCOR internal score for “time from dev-laptop to production SLA”.

DiGiCOR walks every client through these trade-offs in a whiteboard session of under an hour, often saving six figures in avoidable overspend.
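One number that usually anchors an ROI discussion is cost per million tokens. A minimal sketch, assuming straight-line amortisation of the purchase price plus electricity and a node kept busy around the clock; every price, power draw and throughput figure below is a hypothetical placeholder to be replaced with a real quote and a measured benchmark.

```python
# Cost-per-token sketch. All inputs are hypothetical placeholders;
# the currency is whatever your quote is in.

HOURS_PER_YEAR = 24 * 365


def owned_cost_per_hour(capex: float, years: float,
                        avg_power_kw: float, price_per_kwh: float) -> float:
    """Straight-line amortised hardware cost plus electricity, per wall-clock hour."""
    return capex / (years * HOURS_PER_YEAR) + avg_power_kw * price_per_kwh


def cost_per_million_tokens(cost_per_hour: float, tokens_per_s: float) -> float:
    """Hourly cost divided by tokens produced per hour, scaled to one million tokens."""
    return cost_per_hour / (tokens_per_s * 3600) * 1_000_000


if __name__ == "__main__":
    # Hypothetical on-prem node: 300k purchase, 3-year life, 6 kW average draw.
    hourly = owned_cost_per_hour(capex=300_000, years=3, avg_power_kw=6.0,
                                 price_per_kwh=0.30)
    # Hypothetical sustained throughput; use your own benchmark result here.
    print(f"{cost_per_million_tokens(hourly, tokens_per_s=5_000):.2f} per million tokens")
```

Because the amortised cost accrues whether or not the node is working, idle hours raise the real figure; for a cloud option, pass the provider’s hourly rate straight into `cost_per_million_tokens`.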

| Primary Goal | First Node to Test | Why It’s Likely the Best ROI |
|---|---|---|
| Enterprise LLM pilot | 4 × H100 PCIe or an 8 × Gaudi 3 pod | CUDA speed vs Ethernet simplicity: benchmark both. |
| Edge vision + XR | Two L40S in a 2U chassis | NVENC + Ada RT cores; 350 W per card, air-cooled. |
| HPC + AI convergence | MI300A blades | Shared HBM erases PCIe bottlenecks. |
| Frontier (> 1 T-parameter) research | Cerebras CS-3 lease | 1 WSE < 100 GPUs; OPEX beats CAPEX. |
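Whether OPEX really beats CAPEX depends mostly on how long the project runs. A minimal break-even sketch, assuming a flat monthly lease, a flat monthly running cost for owned hardware, and no resale value, financing cost or tax effects; the helper names and all figures are hypothetical.

```python
import math

# Lease-vs-buy sketch. Assumptions: flat monthly lease fee, flat monthly running
# cost (power, support, rack space) when owning, zero resale value, no financing.


def breakeven_months(capex: float, own_monthly: float, lease_monthly: float) -> float:
    """Months after which owning becomes cheaper than leasing (inf if it never does)."""
    monthly_saving = lease_monthly - own_monthly
    if monthly_saving <= 0:
        # Leasing costs no more per month than running owned kit,
        # so the purchase price is never paid back.
        return math.inf
    return capex / monthly_saving


def cheaper_option(project_months: int, capex: float,
                   own_monthly: float, lease_monthly: float) -> str:
    """Compare total spend over the project's life."""
    buy_total = capex + own_monthly * project_months
    lease_total = lease_monthly * project_months
    return "lease" if lease_total < buy_total else "buy"


if __name__ == "__main__":
    # Hypothetical figures only.
    capex, own, lease = 300_000, 4_000, 16_500
    print(f"break-even after {breakeven_months(capex, own, lease):.0f} months")
    for months in (12, 36):
        print(f"{months}-month project: {cheaper_option(months, capex, own, lease)}")
```

With these placeholder numbers, buying pays back after 24 months, so a one-year research sprint favours a lease while a three-year programme favours ownership.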

NVIDIA’s ecosystem still delivers the shortest path from idea to production, which is why it’s so popular. But the best bottom line may belong to AMD’s bandwidth monsters, Intel’s Ethernet pods or a pay-as-you-go TPU. DiGiCOR exists so you never have to gamble on which one.

Bring us your latency target, compliance headache or cooling constraint, and our engineers will line up the silicon that hits your bull’s-eye—green, red, blue, or wafer-sized grey.

Ready to start? Book a 30-minute architecture call or drop by our Bayswater lab. Coffee’s on us. Benchmarks are on standby.